Choose your own PHP version

One of our most common support requests recently has been for PHP 8 on hosting accounts. Until now, our policy has been to run our hosting servers on a stable release of the Debian operating system, and to install only operating system-supplied packages. This ensures that we have a reliable, stable platform that is fully covered by Debian’s security updates process.

Our hosting servers are currently on Debian 10 (Buster), which means PHP is stuck on version 7.3. Debian takes a pretty conservative approach to updates. Not so much “if it ain’t broke, don’t fix it” but more like “if it’s broken, but not a security hazard, still don’t fix it”. This is an excellent way to manage a stable, reliable operating system.

On the other hand, PHP 8 was released at the end of 2020, and it seems that an increasing number of developers are now dropping support for PHP 7 in their products. We find it odd that developers would drop support for a current stable version of what is probably the world’s most widely used server-side OS, but nonetheless we can’t ignore the increasing number of our customers who need a more recent version.

Choose your own version

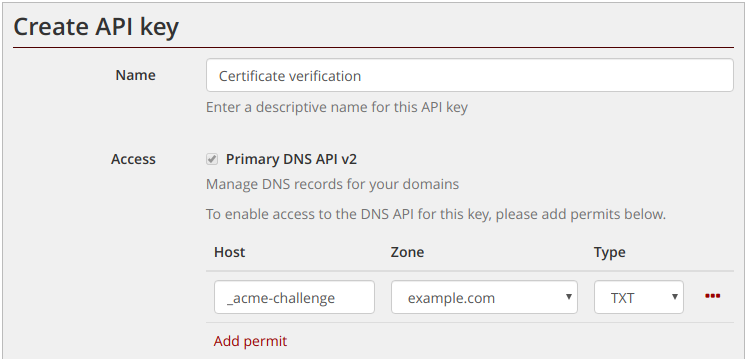

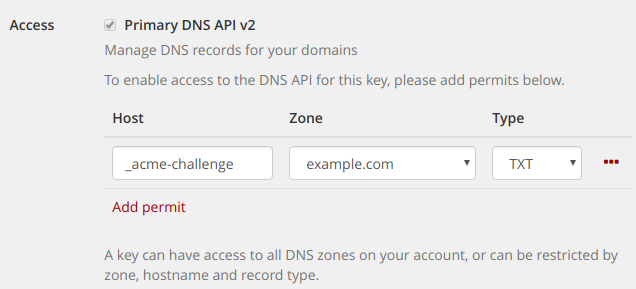

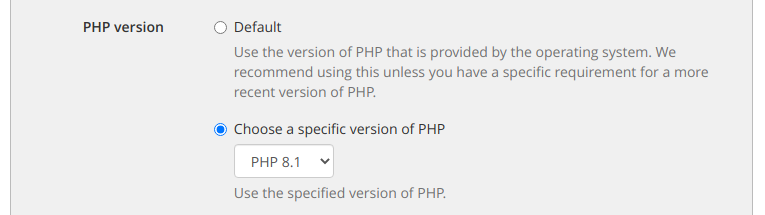

We decided that if we were going to support newer versions of PHP, we were going to do it properly, so users of our hosting accounts can now select which version of PHP they use in our control panel.

The PHP version can be selected independently for each hosted website, and changes take effect immediately, making it easy to test a migration to a newer version and to roll back if problems are encountered.

Our hosting accounts support unlimited hosted websites, so if you want to test whether your site will work with a newer version, you can always spin up a staging site on a sub-domain and switch the PHP version for just that site.
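One quick way to confirm that a version switch has taken effect on a staging site is to upload a small script and load it in a browser. The following is only a minimal sketch: the function name `meets_minimum` and the `8.1.0` threshold are illustrative assumptions, not part of our control panel, and you would substitute whatever minimum version your application actually requires.

```php
<?php
// Hypothetical helper: report whether a PHP version meets a minimum requirement.
// The function name and the threshold below are illustrative assumptions.
function meets_minimum(string $current, string $minimum): bool
{
    // version_compare() understands PHP-style version strings such as "7.3.31".
    return version_compare($current, $minimum, '>=');
}

$minimum = '8.1.0'; // assumed requirement; change to suit your application
$status = meets_minimum(PHP_VERSION, $minimum) ? 'OK' : 'too old';

// PHP_VERSION is the version of the interpreter serving this request.
echo 'Running PHP ' . PHP_VERSION . " (minimum {$minimum}): {$status}\n";
```

Reloading the page after changing the version in the control panel should show the new version string. It is sensible to delete the script once you have finished testing.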

Supported versions

We currently support PHP 7.3, 7.4 and 8.1 on our hosting servers, and are considering adding support for 8.0. If you have a requirement for a specific version, please drop us an email.

deb.sury.org

The thing that makes this possible is the excellent work of Ondřej Surý, long-term maintainer of Debian’s PHP packages. In addition to providing the official Debian packages, Ondřej also provides deb.sury.org, a private repository providing Debian packages for multiple versions of PHP, built and maintained to the same standards as the official Debian packages.
