Abusive AI Web Crawlers: Get Off My Lawn

As many other folks have reported in the last few weeks, we have also been seeing a huge increase in the amount of traffic from abusive web crawlers.

Automated blocking of abusive traffic has long been a necessary evil. We already block a number of badly behaved SEO and AI crawlers on our shared hosting servers and, on request, on some customer servers. We also have a number of automatic tools to block abusive clients, which are typically attempting to brute-force passwords or run web security scanners: we firewall out an IP address after a number of suspicious requests. These crawlers and bad actors can already outnumber real organic traffic, but the scale of the recent activity, along with the lengths taken to frustrate automated blocking, is on another level.
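As a rough illustration of that kind of threshold-based blocking, here is a minimal sketch. It is not our production tooling: the `SuspicionTracker` name, the threshold of 5 and the 10-minute window are all assumptions made up for the example, and deciding what counts as "suspicious" is left to the caller.

```python
from collections import defaultdict, deque


class SuspicionTracker:
    """Flag an IP once it makes too many suspicious requests within a time window.

    Hypothetical helper: the default threshold and window are illustrative only.
    """

    def __init__(self, threshold=5, window_seconds=600):
        self.threshold = threshold
        self.window_seconds = window_seconds
        # ip -> timestamps (in seconds) of its recent suspicious requests
        self._events = defaultdict(deque)

    def record(self, ip, timestamp):
        """Record one suspicious request; return True if the IP should be blocked."""
        events = self._events[ip]
        events.append(timestamp)
        # Forget events that have fallen out of the sliding window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) >= self.threshold


tracker = SuspicionTracker(threshold=3, window_seconds=60)
for second in (0, 10, 20):
    should_block = tracker.record("192.0.2.1", second)
print(should_block)  # the third hit inside 60 seconds reaches the threshold
```

A scheme like this only works when a client keeps coming back: an address that makes a single request never gets anywhere near the threshold.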

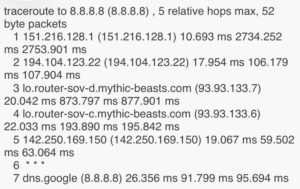

This new attack comes from a great many IP addresses, each making a tiny number of requests – often just one – with plausible-looking but randomly generated User-Agents. We’ve had some success detecting and blocking these, but not without problems. At times some of our servers have struggled under the sheer number of connections, and some of the blocks we’ve put in place have affected legitimate users, especially those on very old computers. If this is you, then we’re sorry you’ve been caught up in this.

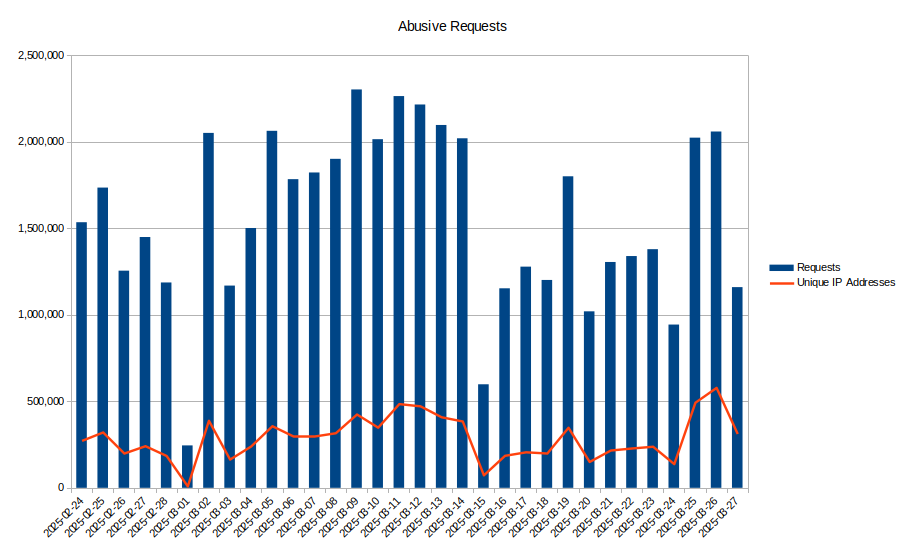

To give you some idea of the scale, over the last month one of our shared hosting servers has averaged over 1.5 million fraudulent requests per day, from 290,000 unique IP addresses per day. These are addresses that we are highly confident are not making legitimate requests. We’ve identified 5.1 million unique IP addresses during this period, and 3.4 million of those have made only a single request, which has made it very difficult for us to block them proactively.
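For anyone wanting to pull the same kind of figures out of their own logs, the counting itself is simple. The sketch below assumes you have already extracted one client IP per flagged request; the `summarise_ips` name and the sample addresses are made up for illustration.

```python
from collections import Counter


def summarise_ips(ips):
    """Summarise request volume per client IP.

    `ips` is any iterable yielding one IP string per request.
    """
    counts = Counter(ips)
    return {
        "requests": sum(counts.values()),
        "unique_ips": len(counts),
        # Addresses seen exactly once: the ones a rate limit can never catch.
        "single_request_ips": sum(1 for n in counts.values() if n == 1),
    }


sample = ["192.0.2.1", "192.0.2.1", "198.51.100.7", "203.0.113.9"]
print(summarise_ips(sample))
```

On the figures above, 3.4 million of 5.1 million addresses works out at roughly two thirds making only a single request.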

From these IPs, we’ve seen 2.4 million unique User-Agents, and again 1.9 million of these have been seen only once. We’d certainly be surprised if there were as many Windows 95 users left as we’ve seen in these logs, especially ones able to talk to a modern TLS-enabled website.
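One crude signal this suggests is a User-Agent claiming an operating system that is very unlikely to manage a modern TLS connection. The sketch below is an assumption-laden illustration rather than the rules we actually run: the token list is our own guess at common legacy OS markers, and a check like this will also catch the occasional genuine user on a very old machine.

```python
import re

# Hypothetical list of legacy OS tokens; tune it for your own traffic.
LEGACY_OS = re.compile(
    r"Windows 95|Win95|Windows 98|Win98|Windows NT 4\.0|Windows CE",
    re.IGNORECASE,
)


def claims_legacy_os(user_agent):
    """Return True if the User-Agent string advertises a legacy operating system."""
    return bool(LEGACY_OS.search(user_agent))


print(claims_legacy_os("Mozilla/4.0 (compatible; MSIE 5.5; Windows 95)"))
print(claims_legacy_os("Mozilla/5.0 (Windows NT 10.0; Win64; x64) Gecko/20100101 Firefox/128.0"))
```

On its own this is weak evidence, so it is probably best treated as one input to a score rather than as a reason to block outright.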

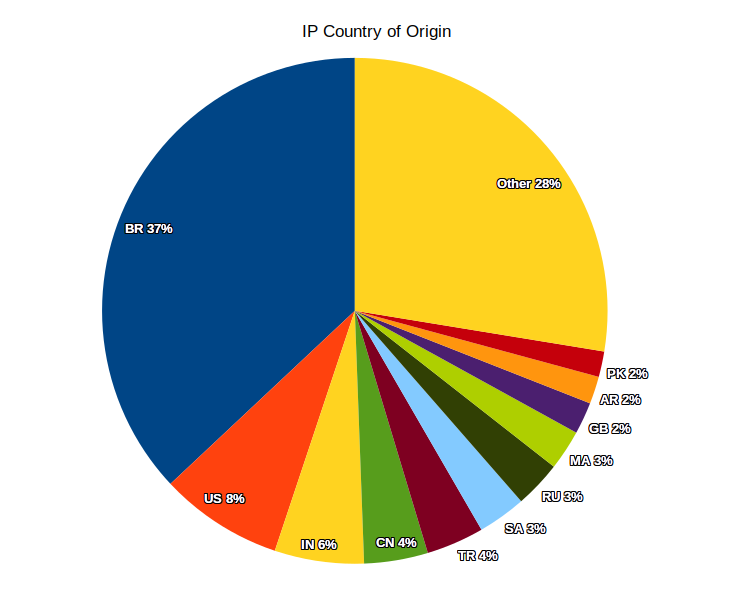

The vast majority of these requests come from consumer ISP networks in a wide variety of countries, with Brazil the biggest contributor by far. UK networks make up only around 2% of the attacks we’ve seen, but the pattern is the same: the big consumer ISPs are the ones we see the most. We’ve been careful to exclude any IP addresses that also show what looks like legitimate activity, so it’s possible we are undercounting, especially with the growth of CGNAT. Around 5% of the requests are from IPv6 addresses.
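The exclusion step and the IPv6 share can be sketched with Python's standard `ipaddress` module. The function names and sample addresses are hypothetical, and how you decide which addresses show "legitimate" activity is assumed to happen elsewhere.

```python
import ipaddress


def attack_only(abusive, legitimate):
    """Drop any flagged address that has also shown legitimate-looking activity.

    Addresses are parsed first so that different spellings of the same
    IPv6 address compare as equal.
    """
    legit = {ipaddress.ip_address(a) for a in legitimate}
    return [ip for ip in map(ipaddress.ip_address, abusive) if ip not in legit]


def ipv6_share(addresses):
    """Return the fraction of parsed addresses that are IPv6.

    Pass one entry per request to get a per-request share.
    """
    addresses = list(addresses)
    if not addresses:
        return 0.0
    return sum(1 for ip in addresses if ip.version == 6) / len(addresses)


flagged = ["203.0.113.5", "2001:db8::1", "198.51.100.9", "192.0.2.44"]
seen_legit = ["198.51.100.9"]
remaining = attack_only(flagged, seen_legit)
print(len(remaining), ipv6_share(remaining))
```

Excluding shared addresses this way errs on the side of caution: behind CGNAT, one address can carry both a bot and a real customer, so the whole address drops out of the count.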

The rumours that this is a botnet made up mostly of compromised Android set-top boxes, leased out to an AI crawler that’s trying to avoid being blocked, seem likely to us, but we’re not impressed. Mitigating the impact on our customers has been a significant waste of staff time over the last few weeks.

If you’re an ISP that wants to know if you’re part of this botnet, please check whether your ASN is in this file, then contact support for a copy of our logs. There are over 22,000 distinct ASNs in our data, with more than 200 of those networks based in the UK.

Thanks to bgp.tools for the network and country data.